LocalAI

LocalAI is a free, open-source alternative to OpenAI. This guide shows how to connect LocalAI to the ONLYOFFICE editors on Linux.

Note that LocalAI can also be installed with Docker. For details, see the official LocalAI documentation.

Step 1: Install LocalAI

Hardware requirements

- CPU: A multi-core processor.

- RAM: At least 8 GB is required; 16 GB or more is recommended.

- Storage: At least 20 GB is required. SSD storage is recommended.

- Network: LocalAI works without an internet connection. A reliable connection is still recommended for downloading models and applying updates.
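The requirements above can be checked from a terminal before installing. The following is a minimal sketch, assuming a Linux system with `/proc/meminfo`, `nproc`, `df`, and `awk` available. The helper names (`ram_gb`, `disk_free_gb`, `check_min`) are illustrative and not part of LocalAI; the thresholds are the minimum values from the list.

```shell
#!/bin/sh
# Minimum values taken from the hardware requirements above.
MIN_RAM_GB=8
MIN_DISK_GB=20

# Total RAM in GB, rounded to the nearest integer (Linux only).
# The kernel reserves some memory, so an 8 GB machine reports slightly less.
ram_gb() {
    awk '/^MemTotal:/ { printf "%.0f\n", $2 / 1024 / 1024 }' /proc/meminfo
}

# Free disk space in whole GB on the filesystem that holds the given path.
disk_free_gb() {
    df -Pk "$1" | awk 'NR == 2 { printf "%d\n", $4 / 1024 / 1024 }'
}

# Prints "ok" if the first argument is at least the second, else "too low".
check_min() {
    if [ "$1" -ge "$2" ]; then echo "ok"; else echo "too low"; fi
}

echo "CPU cores: $(nproc)"
echo "RAM: $(ram_gb) GB ($(check_min "$(ram_gb)" "$MIN_RAM_GB"))"
echo "Free disk: $(disk_free_gb /) GB ($(check_min "$(disk_free_gb /)" "$MIN_DISK_GB"))"
```

The script checks the root filesystem; pass a different path to `disk_free_gb` if models will be stored elsewhere.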

Installation

curl https://localai.io/install.sh | sh

Step 2: Install the required model

Use the following command to install the required model:

local-ai models install name_of_the_model

where name_of_the_model is the name of the model you need.

To learn more about the available models, see the official LocalAI website.

Step 3: Start LocalAI with the CORS flag

This step is required if you want to use your AI assistant on the web and not only locally.

local-ai run --cors

Alternatively, you can add the following line to the /etc/localai.env file:

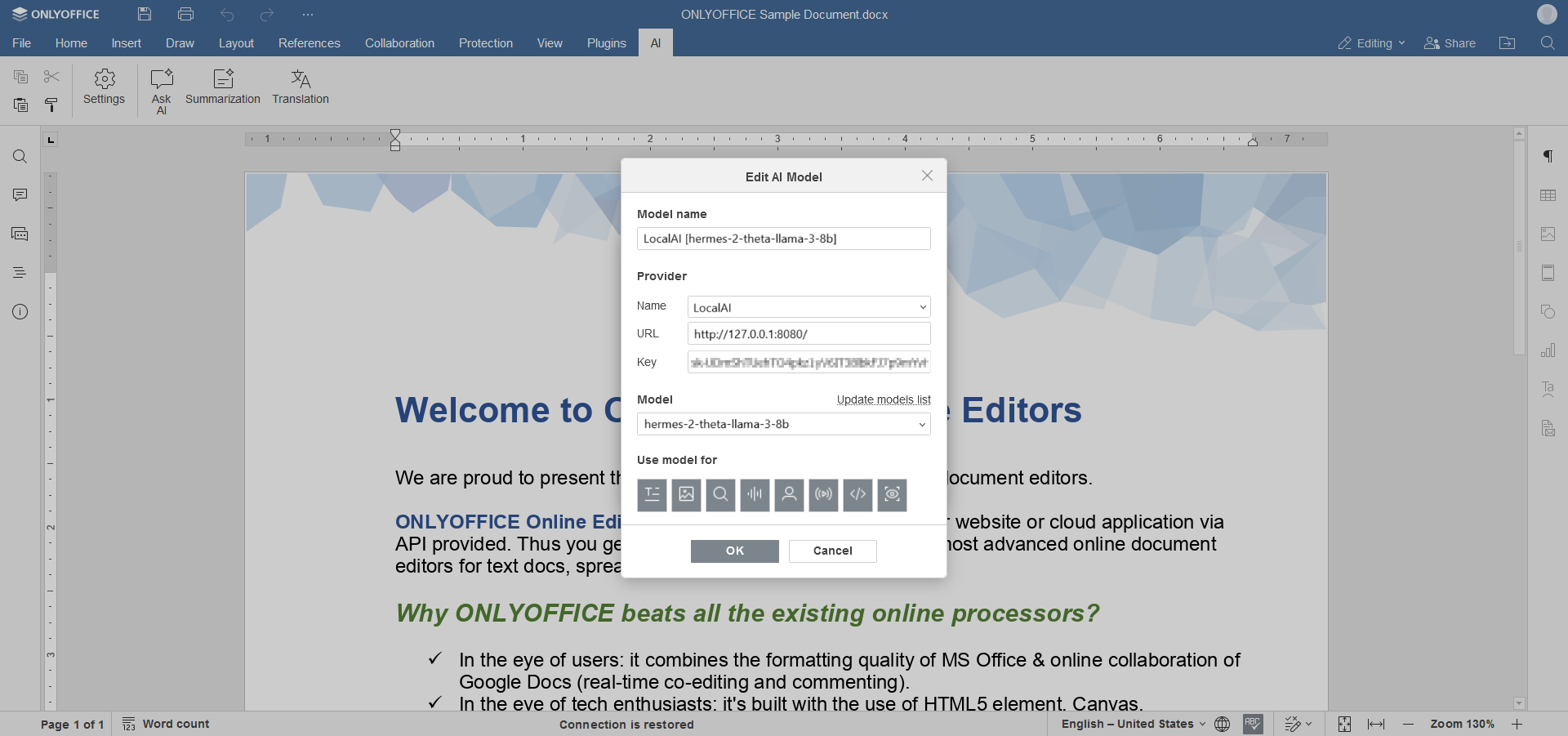

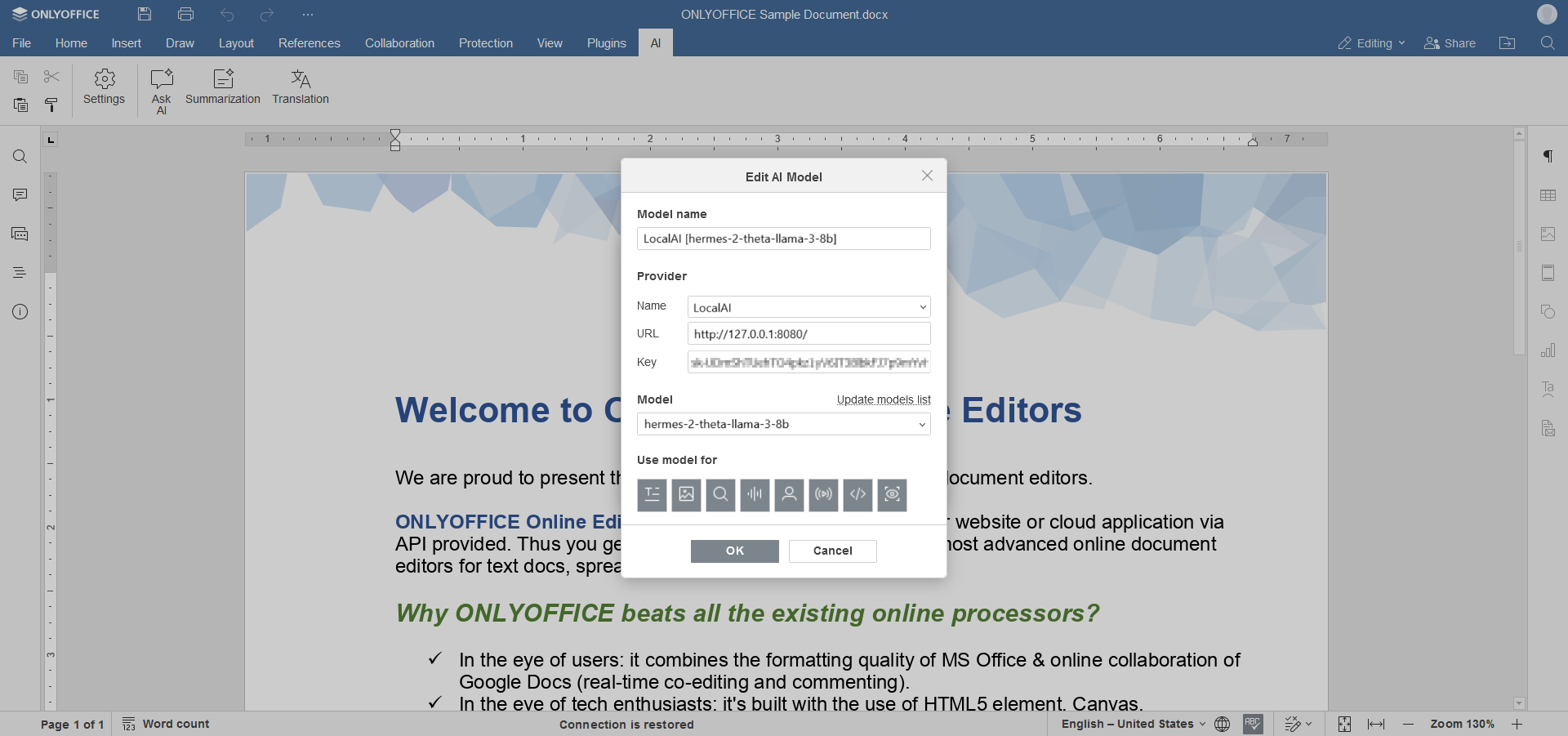

CORSALLOWEDORIGINS = "*"

Step 4: Configure the AI plugin settings in ONLYOFFICE
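Before configuring the plugin, it can help to confirm that the server started in Step 3 actually returns CORS headers. The sketch below sends a request with an `Origin` header and looks for `Access-Control-Allow-Origin` in the response. It assumes the default address `http://127.0.0.1:8080` and the OpenAI-compatible `/v1/models` endpoint; `https://docs.example.com` is a hypothetical stand-in for the address of your editors, and `cors_header` is an illustrative helper, not a LocalAI command.

```shell
#!/bin/sh
# Assumed default address; change it if local-ai listens elsewhere.
LOCALAI_URL="${LOCALAI_URL:-http://127.0.0.1:8080}"
# Hypothetical origin standing in for the address of your ONLYOFFICE editors.
ORIGIN="${ORIGIN:-https://docs.example.com}"

# Reads HTTP response headers on stdin and prints the value of
# Access-Control-Allow-Origin (prints nothing if the header is absent).
cors_header() {
    tr -d '\r' | awk '{
        line = $0
        if (tolower(line) ~ /^access-control-allow-origin:/) {
            sub(/^[^:]*:[ \t]*/, "", line)   # strip the header name
            print line
        }
    }'
}

# -s: quiet, -D -: write response headers to stdout, -o /dev/null: drop the body.
allowed=$(curl -s --max-time 5 -D - -o /dev/null \
    -H "Origin: $ORIGIN" "$LOCALAI_URL/v1/models" | cors_header)

if [ -n "$allowed" ]; then
    echo "CORS enabled, allowed origin: $allowed"
else
    echo "No Access-Control-Allow-Origin header returned; check the CORS setting."
fi
```

An empty result can also mean that the server is not running or is listening on a different port, so it is worth ruling that out first.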

- For the initial setup, refer to our configuration guide.

- After connecting LocalAI to the ONLYOFFICE editors, specify LocalAI as the provider name.

- Enter the URL http://127.0.0.1:8080, unless you changed it when starting local-ai.

- The models are loaded into the list automatically. Select the model that you installed in Step 2 of this guide.

- Click OK to save the settings.
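If the model list in the plugin stays empty, querying the server directly may help narrow down the cause. The following sketch assumes the default URL from the list above and that `/v1/models` returns an OpenAI-style JSON list in which each entry has an `id` field. `model_ids` is an illustrative helper that extracts those values with `grep` and `sed`, so no JSON tool is needed.

```shell
#!/bin/sh
# Assumed default address; change it if local-ai listens elsewhere.
LOCALAI_URL="${LOCALAI_URL:-http://127.0.0.1:8080}"

# Reads an OpenAI-style model list on stdin and prints one model id per line.
model_ids() {
    grep -o '"id" *: *"[^"]*"' | sed 's/^"id" *: *"//; s/"$//'
}

# Prints the ids of all models the server currently exposes.
curl -s --max-time 5 "$LOCALAI_URL/v1/models" | model_ids
```

The model installed in Step 2 should appear in the output. If nothing is printed, the server is probably not reachable at that URL.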
